One of the major challenges of a project as large and diverse as EAST is ensuring the availability of retained titles to scholars across the member libraries. In 2016 and 2017, EAST carried out validation studies in order to provide a high level of confidence in the availability of the retained titles for use by all partners. Verification of the existence and condition of retained holdings (known amongst shared print programs as “validation”) helps to build trust among partner libraries that retained volumes will, in fact, be available and usable.

To estimate the likelihood that a retained volume is actually on the shelf at any of the member institutions, EAST conducted sample-based validation studies. Their specific goals were to establish and document the degree of confidence, and the likelihood of error, with which any EAST committed title can be expected to be available for circulation. The results of the validation sample studies help predict the likelihood that titles selected for retention actually exist, can be located in a Retention Partner's collection, and are in usable condition.

This work is documented below and published in the Journal of Collaborative Librarianship.

Results

Overall, EAST can report a 97% availability rate.

The aggregated results from both cohorts (312,000 holdings across the 52 libraries) showed:

97% of monographs in the sample were accounted for. Across libraries, the mean rate was 97% and the median 97.1%, with a high of 99.8% and a low of 91%. (Note: "accounted for" includes items previously determined to be in circulation through an automated check of the library's ILS.)

2.3% of titles were in circulation at the time of the study

90% of the titles were rated as being in acceptable/good or excellent condition, and 10% were rated as being in poor condition. Not surprisingly, older titles tended to be in poorer condition.
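The mean, median, high and low figures above summarize per-library rates. The short sketch below shows one way such a summary could be computed; the library names and counts are hypothetical, not study data, and the function names are illustrative only. Because every library sampled the same number of items (6,000), the mean of the per-library rates equals the pooled rate across all sampled items.

```python
from statistics import mean, median
from typing import Dict, List, Tuple


def accounted_for_rate(found_on_shelf: int, on_loan: int, sampled: int) -> float:
    """Share of one library's sample found on the shelf or already on loan."""
    return (found_on_shelf + on_loan) / sampled


def summarize(rates: List[float]) -> Dict[str, float]:
    """Mean, median, highest and lowest per-library rate."""
    return {"mean": mean(rates), "median": median(rates), "high": max(rates), "low": min(rates)}


# Hypothetical counts, NOT study data: (found on shelf, on loan, items sampled)
counts: Dict[str, Tuple[int, int, int]] = {
    "Library A": (5_700, 150, 6_000),
    "Library B": (5_800, 100, 6_000),
    "Library C": (5_300, 160, 6_000),
}
rates = {name: accounted_for_rate(*c) for name, c in counts.items()}
summary = summarize(list(rates.values()))
```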

A few notable observations included:

Items published before 1900 were in significantly poorer condition: some 45% of them were rated "poor" on the condition scale

Poor condition was also somewhat correlated with subject area

The most significant factor in whether an item was missing was the holding library.

For a full account of the results, including correlations of age and subject matter with the condition of materials, see the Descriptive Analysis of the Validation Sample Study prepared by Professor Grant Ritter for Cohort 1, for Cohort 2, and for both cohorts combined.

Process

Working with a statistical consultant, Professor Grant Ritter of Brandeis University, and advised by the EAST Validation Working Group, the EAST team developed the following methodology for the study:

- Provided a sample of 6,000 items for each of the 52 libraries, for a total of 312,000 items across the two cohorts

- Drew each library's sample from the data extract it had provided for the collection analysis

- Developed a data collection tool that let library workers (typically student workers) collect data easily and efficiently

- Provided documentation on checking the library's sample against its local circulation system, so that items known to be checked out were removed before the shelf check began

- Developed an easy-to-implement method for a cursory check of each item's physical condition while the worker was handling it

- Provided documentation to the libraries to facilitate training of their local workers.
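The sampling and circulation pre-check steps above can be sketched as follows. This is a minimal illustration that assumes a simple random sample without replacement from each library's holdings extract; the actual statistical design used by Professor Ritter may have differed. Item identifiers, the seed and the function names are hypothetical.

```python
import random
from typing import List, Optional, Set, Tuple

SAMPLE_SIZE = 6_000  # items per library, as in the study design


def draw_sample(holdings: List[str], size: int = SAMPLE_SIZE,
                seed: Optional[int] = None) -> List[str]:
    """Simple random sample without replacement (an assumed design)."""
    rng = random.Random(seed)
    return rng.sample(holdings, min(size, len(holdings)))


def prescreen(sample: List[str], checked_out: Set[str]) -> Tuple[List[str], List[str]]:
    """Split the sample into items to look for on the shelf and items already on loan."""
    shelf_check = [item for item in sample if item not in checked_out]
    on_loan = [item for item in sample if item in checked_out]
    return shelf_check, on_loan


# Usage with hypothetical barcodes and a hypothetical circulation export
holdings = [f"BC{n:07d}" for n in range(50_000)]
checked_out = set(holdings[::40])
sample = draw_sample(holdings, seed=2016)
shelf_check, on_loan = prescreen(sample, checked_out)
```

Removing on-loan items before the shelf check means workers only search for items the library expects to find, while the on-loan items can still be counted as "accounted for".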

The EAST team completed preparation for the Validation Sample Study (VSS) by mid-February 2016. Data collection took place in early spring 2016 for Cohort 1 libraries and in fall 2017 for Cohort 2 libraries.

The Data Collection Tool for the Validation Sample Study

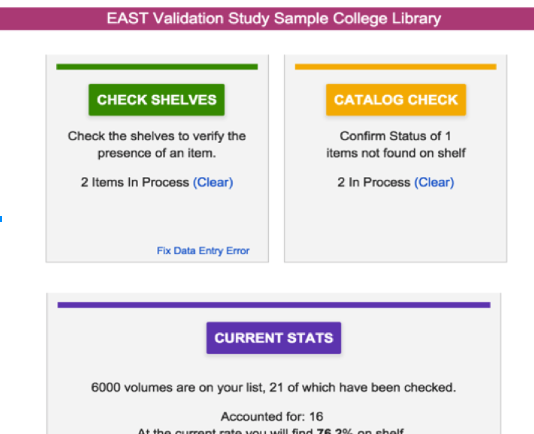

To support efficient data collection for the first validation sample study, the EAST Data Librarian, Sara Amato, worked closely with members of the Validation Working Group to design a web-based tool. The tool supported downloading the random list of titles selected from each participating library's holdings records. Before using the tool, librarians ran their randomized list of 6,000 titles against their current ILS to flag any items in circulation. Accounting for checked-out volumes at the outset ensured that only items expected to be on the shelf were downloaded to the tool.

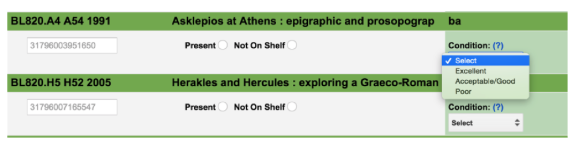

To use the tool, a student or staff worker specified the number of items to check, and those items were loaded in location and call number order. Using a tablet, laptop or workstation with an attached barcode scanner, the worker then scanned the first title on the list. If the scanned barcode matched an item on the list, the tool automatically updated its status to Present. The worker was then asked to do a brief condition check of the item and rate it as Poor, Acceptable/Good or Excellent. The EAST team developed both documentation and a short video to train workers and keep this check simple.
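The workflow just described (load a batch in shelf order, scan a barcode to mark the item Present, then record its condition) can be modeled roughly as below. This is a hypothetical sketch, not the EAST tool's actual code: the class and function names are invented, and plain string sorting only approximates true Library of Congress call-number order, which needs a normalized sort key in practice.

```python
from dataclasses import dataclass
from typing import List, Optional

CONDITIONS = ("Excellent", "Acceptable/Good", "Poor")


@dataclass
class SampleItem:
    barcode: str
    location: str
    call_number: str
    status: str = "Unchecked"          # becomes "Present" once scanned
    condition: Optional[str] = None    # one of CONDITIONS after the condition check


def load_batch(items: List[SampleItem], n: int) -> List[SampleItem]:
    """Next n unchecked items, ordered by location, then call number.
    Plain string sorting only approximates real call-number shelf order."""
    pending = [item for item in items if item.status == "Unchecked"]
    return sorted(pending, key=lambda item: (item.location, item.call_number))[:n]


def mark_present(batch: List[SampleItem], barcode: str) -> Optional[SampleItem]:
    """Mark the scanned item Present; return None if the barcode is not in this batch."""
    for item in batch:
        if item.barcode == barcode:
            item.status = "Present"
            return item
    return None


def set_condition(item: SampleItem, condition: str) -> None:
    """Record the worker's cursory condition rating."""
    if condition not in CONDITIONS:
        raise ValueError(f"unknown condition: {condition!r}")
    item.condition = condition


# Usage with hypothetical items
items = [
    SampleItem("31001", "Main", "QA76.9 .D3"),
    SampleItem("31002", "Annex", "PR4034 .P7"),
    SampleItem("31003", "Main", "BF121 .J3"),
]
batch = load_batch(items, 2)
scanned = mark_present(batch, "31003")
if scanned is not None:
    set_condition(scanned, "Poor")
```

Items left as "Unchecked" after a batch are the candidates for the manual catalog check that the tool also offered.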

Although Wi-Fi access was required to download the items to be checked and to upload the results once checking was complete, the barcode scanning and condition checking themselves could be done offline. The tool also supported multiple users checking items in the stacks simultaneously.

Because the data collection tool reported results in real time as batches of item checks were submitted, the EAST team had up-to-date availability metrics across the EAST libraries. When data collection was complete, the results (anonymized except in reports to each local library) were shared across the EAST membership.
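One way to picture the real-time reporting is a running per-library tally that is updated each time a batch of checks (possibly collected offline and uploaded later) is submitted, with other libraries' names replaced by anonymous labels in each library's view. The sketch below is an assumption for illustration only: the class, the label mapping and the "found on shelf" rate it reports are hypothetical and not taken from the EAST tool.

```python
from collections import defaultdict
from typing import Dict, Iterable


class AvailabilityTally:
    """Running per-library tally, updated as each batch of item checks is submitted."""

    def __init__(self, anon_ids: Dict[str, str]):
        self.anon_ids = anon_ids          # hypothetical library -> anonymous label mapping
        self.checked: Dict[str, int] = defaultdict(int)
        self.present: Dict[str, int] = defaultdict(int)

    def submit_batch(self, library: str, found_flags: Iterable[bool]) -> None:
        """Add one uploaded batch; each flag is True if the item was found on the shelf."""
        for found in found_flags:
            self.checked[library] += 1
            self.present[library] += int(found)

    def report_for(self, viewer: str) -> Dict[str, float]:
        """Shelf-found rates so far; only the viewing library sees its own name."""
        return {
            (lib if lib == viewer else self.anon_ids[lib]): self.present[lib] / self.checked[lib]
            for lib in self.checked
        }


# Usage with hypothetical libraries and batches
tally = AvailabilityTally({"Alpha": "Library 1", "Beta": "Library 2"})
tally.submit_batch("Alpha", [True, True, False, True])
tally.submit_batch("Beta", [True] * 9 + [False])
alpha_view = tally.report_for("Alpha")
```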

How the Validation Data Collection Tool Works

The data collection tool allowed workers to check the shelves for items expected to be available. Items already identified as checked out or missing were not presented for shelf validation. For items expected to be present but not found on the shelf, the worker could perform a manual check against the catalog using the tool. Whenever possible, the data downloaded to the tool included barcodes, so that workers could simply scan each item's barcode with a reader attached to the laptop or tablet to confirm its presence.

As shown above, once a worker scanned the barcode, the tool recorded the item as "Present". The worker then performed a cursory examination of the physical item and selected the description that best reflected its condition: Excellent, Acceptable/Good or Poor.

Validation Sample Study: Informing Retention Decisions

The Validation Sample Study with Cohort One libraries took place concurrently with the collection analysis being done with SCS. Unfortunately, this meant that the validation results were not yet available to inform the collection analysis model used to determine which titles libraries would agree to retain. Of the 240,000 items sampled in the validation study, 92,575 subsequently received retention commitments, a sample of the 'collective collection' large enough to support statistically valid predictive modeling.

Using data from the Cohort 1 Validation Sample Study and data on the full holdings of the 40 EAST Cohort One libraries, Professor Ritter identified 77,925 titles (about 1% of the collective collection at that time) as having a greater than 7.5% chance of being missing or a greater than 50% chance of being in poor condition. These were titles for which EAST was retaining only one copy and for which unallocated surplus copies existed at other EAST libraries. These titles were then offered, as potential additional retention candidates, to the member libraries that held surplus copies and had the highest validation scores. The majority of these additional copies of at-risk titles were accepted as additional retention commitments at Cohort One libraries.

Some 5,000 of the 77,000+ titles were not accepted as additional retentions by the owning library for various reasons (e.g., the item was not on the shelf, or the owning library was not willing to take on additional retentions), and approximately 9,000 of the titles determined to be at risk had no surplus copies in EAST Cohort One. These titles were passed on as potential retention candidates in Cohort Two, where most of them were retained.
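The at-risk rule and the routing of titles to surplus holders can be sketched as below. The two thresholds come from the text; everything else is an assumption for illustration: the per-title probabilities are treated as given outputs of Professor Ritter's model, a library's "validation score" is assumed to be a single number such as its VSS availability rate, and all names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

P_MISSING_THRESHOLD = 0.075  # "greater than 7.5% chance of being missing"
P_POOR_THRESHOLD = 0.50      # "greater than 50% chance of being in poor condition"


@dataclass
class Title:
    title_id: str
    retained_copies: int
    p_missing: float             # model-estimated probability the retained copy is missing
    p_poor: float                # model-estimated probability it is in poor condition
    surplus_holders: List[str]   # libraries holding unallocated surplus copies


def is_at_risk(t: Title) -> bool:
    """A single retained copy whose predicted risk exceeds either threshold."""
    return t.retained_copies == 1 and (
        t.p_missing > P_MISSING_THRESHOLD or t.p_poor > P_POOR_THRESHOLD
    )


def pick_candidate(t: Title, validation_score: Dict[str, float]) -> Optional[str]:
    """Surplus holder with the highest validation score, or None if the title is
    not at risk or has no surplus copy (to be carried forward to a later cohort)."""
    if not is_at_risk(t) or not t.surplus_holders:
        return None
    return max(t.surplus_holders, key=lambda lib: validation_score[lib])


# Usage with hypothetical titles and scores
scores = {"A": 0.99, "B": 0.95}
t1 = Title("t1", 1, 0.10, 0.20, ["B", "A"])   # at risk, has surplus copies
t2 = Title("t2", 1, 0.05, 0.60, [])           # at risk, no surplus copy
t3 = Title("t3", 2, 0.20, 0.90, ["A"])        # two retained copies, not flagged
```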

This was, to our knowledge, the first time that validation sampling had been used to inform retention modeling for shared print.